On the competition

What would happen if multi-core processors increase core counts further, though? Does David believe this will give consumers enough power to deliver what most of them need and, as a result, erode Nvidia’s consumer installed base? “No, that’s ridiculous – it would be at least a thousand times too slow [for graphics],” he said. “Adding four more cores, for example, is not going anywhere near close to what is required.”

But what about Larrabee – do you think Intel will get close to Nvidia with that? “There are no numbers [for Larrabee] yet – there’s only slideware. The way that slideware works is that everything is perfect.”

What if Nvidia has underestimated Intel, though, and Intel builds an efficient microarchitecture that scales really well in graphics? “I’m not going to get into all of the details especially for Larrabee, but they’re missing some pretty important pieces about how a GPU works. Without being too negative, we see Larrabee as the GPU that a CPU designer would build, not the GPU you’d build if you were a GPU designer.”

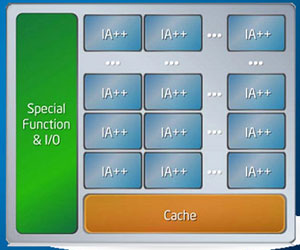

Larrabee is Intel's discrete graphics product and is the first fruit from its terascale research project.

Again, David came across as a little blunt, but he sounded confident about what’s going on inside Nvidia. He’s probably well into the development of products that will hit the market in the same timeframe as Larrabee, though Larrabee itself remains an unknown quantity for now. David finished his deconstruction of Larrabee by pointing out that “[Intel has] been building GPUs for ten years now and they’ve yet to even match our mid-range, never mind our high-end.”

So if he’s confident that Nvidia won’t see much competition from Intel for a while, how does he see the threat from AMD? “Well, ATI’s CTO left about six months ago and AMD’s CTO left a week ago. Now, what does that say when the chief technical person at a company quits?” asked Kirk. He was part of the 10 percent job cut, and his role is being split across the individual departments, I pointed out.

R600 wasn't ATI's greatest invention and the company hasn't really caught up since.

“AMD has been declining because it hasn’t built a competitive graphics architecture for almost two years now—ever since the AMD/ATI merger. They’ve been pulling engineers [from the GPU teams] to Fusion, which integrates GPU technology onto the CPU. They have to do four things to survive, but I don’t think they have enough money to do one thing.

“The first thing they have to do to compete with Intel is the process technology – they have to build the new fabs. The second thing is the next-generation CPU technology. The third one is the next generation GPU technology—we’re going to invest one billion dollars in here this year and they need to invest on the same level to keep up with us. And then the fourth thing is they say the future is going to be this integrated CPU/GPU thing called Fusion, which there’s no evidence to suggest this is true but they just said it. They believe it and they’re now doing it.

“So they have to do these four multi-billion dollar projects, they’re currently losing half a billion dollars per quarter and they owe eight billion dollars. Their market cap is about three billion, so it’s hard to see where the future is in that picture. Really speaking, they’re going to have to pull not one, but several rabbits out of the hat.”

It's hard to argue that Kirk is wrong: the task ahead for AMD is massive, especially as the company is bleeding money every quarter. It doesn't make great reading for AMD and ATI fans, but it'll be interesting to hear what AMD has to say about the bleak future Kirk outlined.
